Link Layer & LANs

Module 6 - Link Layer & LANs

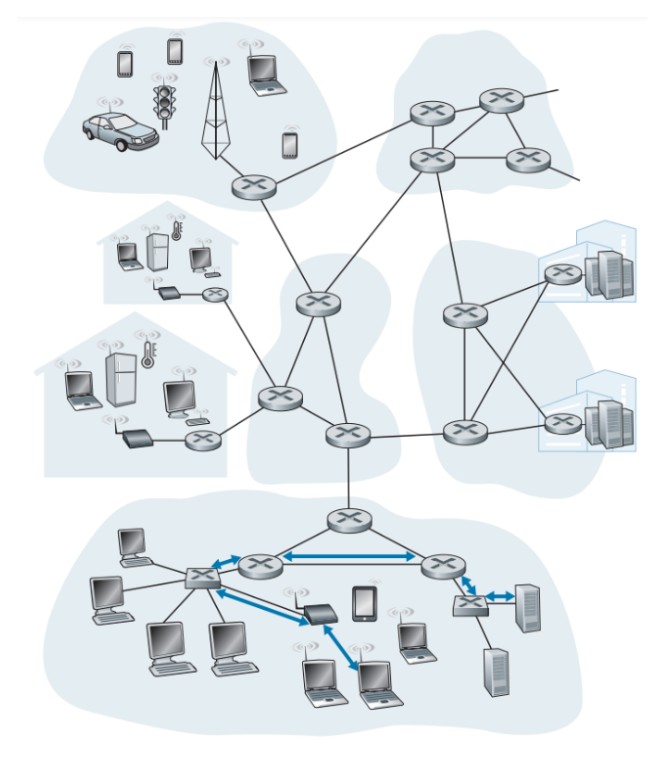

Nodes refer to devices that run a link-layer protocol, such as hosts, routers, switches, and WiFi access points. Links are the communication channels connecting adjacent nodes along the communication path. To transfer a datagram from a source host to a destination host, it must pass through each individual link in the end-to-end path. A transmitting node encapsulates the datagram in a link-layer frame and transmits it over the link.

The link layer, also known as the data link layer or Layer 2, is the lowest layer in the Internet protocol suite, the networking architecture of the Internet. It is responsible for node-to-node data transfer across the physical layer, for error detection and correction, and for establishing links between adjacent network nodes. The figure above shows an example of six link-layer hops between a wireless host and a server.

For the most part, the link layer is implemented in a network adapter, also known as a network interface controller (NIC). The adapter handles link-layer services such as framing, link access, and error detection. The link layer is thus a combination of hardware and software, with the hardware part implemented in the network adapter and the software part running on the host's CPU.

The Services Provided by the Link Layer

A link-layer protocol offers several services:

- Framing: The protocol packages network-layer data into a frame with a data field and header fields, following the structure specified by the protocol.

- Link access: For point-to-point links, the medium access control (MAC) protocol allows the sender to transmit a frame whenever the link is idle. When multiple nodes share a broadcast link, the MAC protocol coordinates their frame transmissions.

- Reliable delivery: Some link-layer protocols guarantee that network-layer data moves across the link without error. This is achieved through acknowledgments and retransmissions, similar to reliable delivery in transport-layer protocols like TCP. It is useful on error-prone links, such as wireless ones, where errors can be corrected locally rather than end to end.

- Error detection and correction: Link-layer protocols provide mechanisms to detect and correct bit errors introduced by signal attenuation and electromagnetic noise. Transmitters include error-detection bits in frames, and receivers perform error checks to detect and, in some schemes, correct errors.

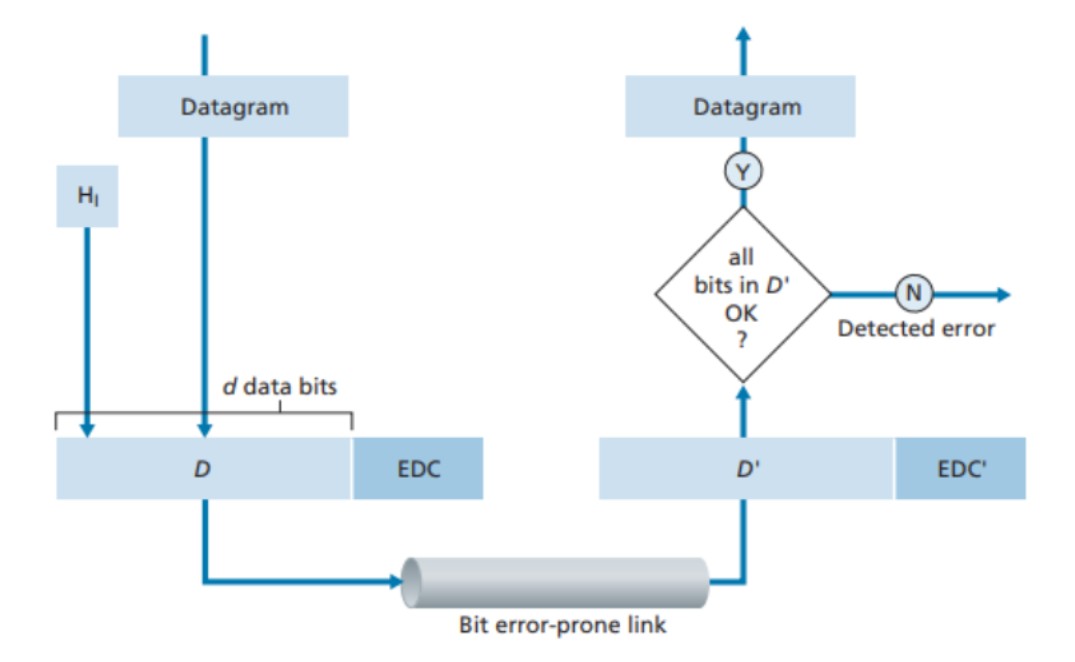

Example of an error-detection and -correction scenario:

The picture above shows the setup. The sending node adds error-detection and -correction bits (EDC) to the data (D) to protect against bit errors. The protected data D includes both the datagram from the network layer and additional information in the link frame header. The combined D and EDC are sent to the receiving node as a link-level frame. At the receiving node, a sequence of bits D' and EDC' is received, which may differ from the original D and EDC due to bit flips during transmission.

The receiver's task is to determine if D' is the same as the original D, based on the received D' and EDC'. The decision made by the receiver is whether an error is detected, not whether an error has occurred. Error-detection and -correction techniques can sometimes identify bit errors, but they are not foolproof. There may still be undetected bit errors, meaning the receiver might not be aware of the presence of errors in the received information.
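The difference between "an error is detected" and "an error has occurred" can be made concrete with a minimal sketch. It assumes a single even-parity bit as the EDC, which is one of the simplest schemes and is chosen here only for illustration; the function names are hypothetical.

```python
def even_parity_bit(bits):
    """Return the parity bit that makes the total number of 1s even."""
    return sum(bits) % 2


def error_detected(received_bits, received_parity):
    """Return True if the receiver's even-parity check fails."""
    return (sum(received_bits) + received_parity) % 2 != 0


data = [0, 1, 1, 1, 0, 0, 0, 1]   # D
edc = even_parity_bit(data)       # EDC (a single bit in this sketch)

# One bit flipped in transit: the parity check fails, so the error is detected.
one_flip = data.copy()
one_flip[2] ^= 1

# Two bits flipped in transit: parity is even again, so the error goes undetected.
two_flips = one_flip.copy()
two_flips[5] ^= 1

print(error_detected(data, edc))       # no error, none detected
print(error_detected(one_flip, edc))   # error occurred and is detected
print(error_detected(two_flips, edc))  # error occurred but is not detected
```

In the last case D' differs from D, yet the receiver's check passes, which is exactly the undetected-error situation described above.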

Multiple Access Links and Protocols

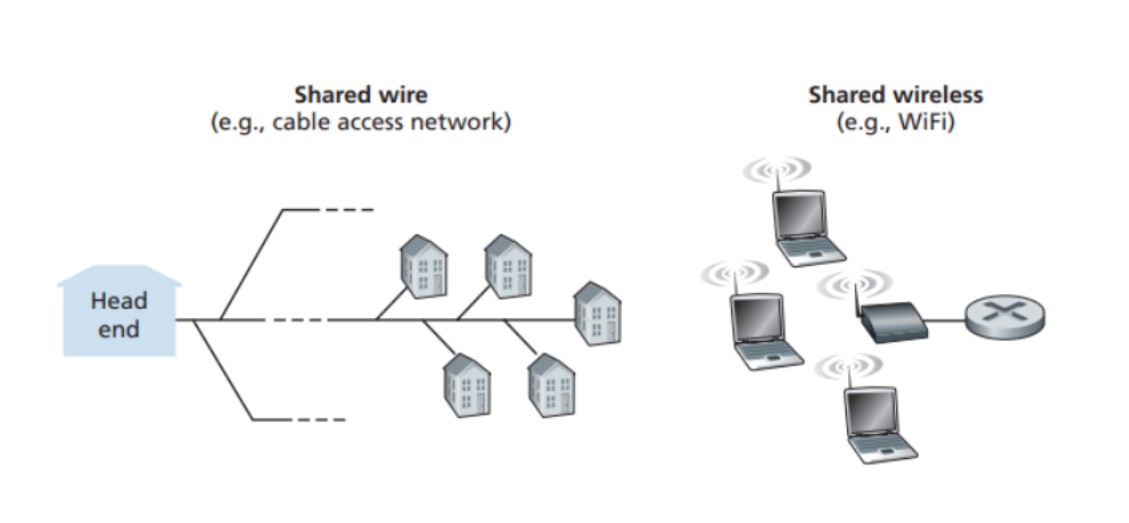

In computer networks, there are two types of links: point-to-point links and broadcast links. Point-to-point links have a single sender and receiver, while broadcast links allow multiple nodes to send and receive on a shared channel. Examples of broadcast links include Ethernet and wireless LANs.

The challenge with broadcast links is coordinating the access of multiple nodes to the shared channel. This is known as the multiple access problem.

Computer networks use multiple access protocols to regulate transmission on the shared broadcast channel. These protocols ensure fair and efficient access to the channel. Collisions can occur when multiple nodes transmit simultaneously, leading to wasted bandwidth. Coordinating transmissions is the responsibility of the multiple access protocol.
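To get a feel for how much bandwidth uncoordinated transmission can waste, consider a rough sketch under simplifying assumptions that are not part of the text: time is divided into slots, and each of `num_nodes` nodes independently transmits in a slot with probability `p`. A slot is useful only when exactly one node transmits.

```python
def slot_outcomes(num_nodes, p):
    """Return (idle, success, collision) probabilities for one slot.

    Assumes each node transmits independently with probability p.
    """
    idle = (1 - p) ** num_nodes
    # Exactly one node transmits and the other num_nodes - 1 stay silent.
    success = num_nodes * p * (1 - p) ** (num_nodes - 1)
    collision = 1 - idle - success
    return idle, success, collision


idle, success, collision = slot_outcomes(num_nodes=2, p=0.5)
print(f"idle={idle:.2f} success={success:.2f} collision={collision:.2f}")
```

With two nodes each transmitting half the time, only half of the slots carry a frame successfully in this model; the rest are idle or lost to collisions.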

There are three main categories of multiple access protocols: channel partitioning protocols, random access protocols, and taking-turns protocols. These protocols have been extensively studied and implemented in various link-layer technologies.

The ideal multiple access protocol should provide efficient throughput for single and multiple nodes, be decentralized to avoid single points of failure, and be easy to implement.

Switched Local Area Networks

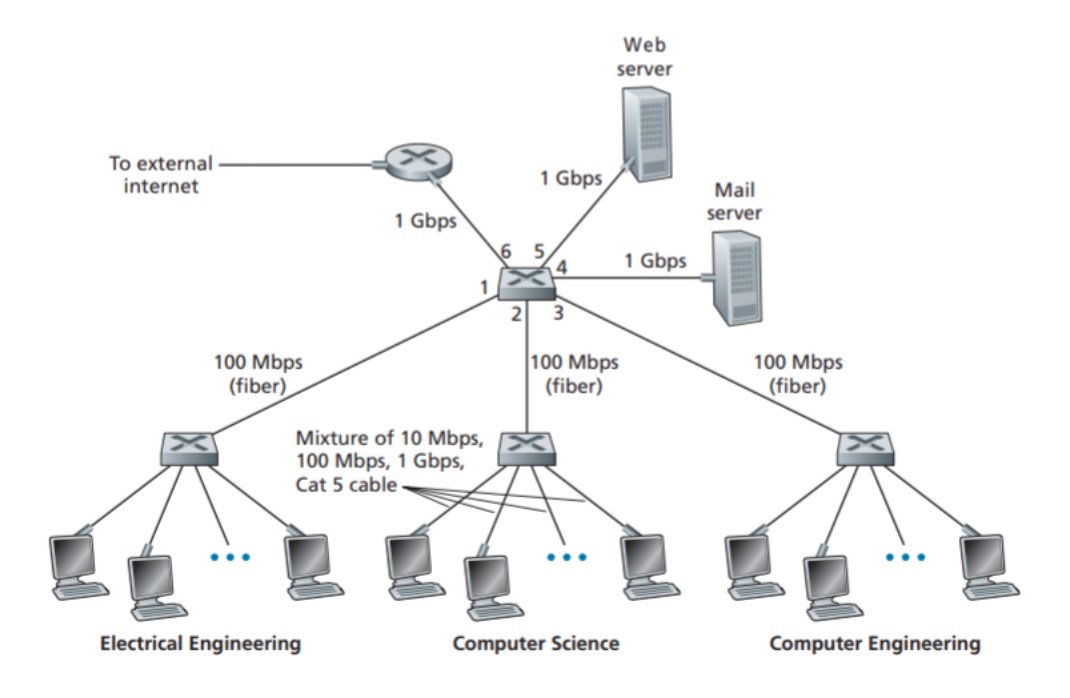

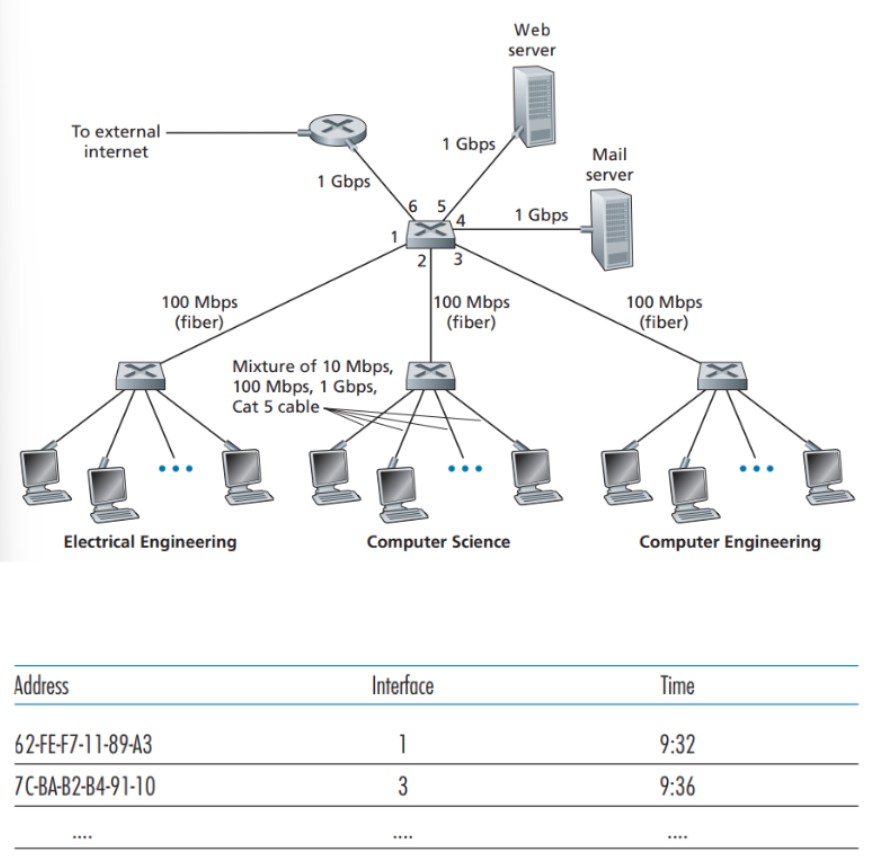

The picture above shows an institutional network in which four switches connect various departments, servers, and a router into a single switched local network.

Switches in these networks operate at the link layer, meaning they handle link-layer frames rather than network-layer datagrams. They don't recognize network-layer addresses or use routing algorithms like OSPF. Instead, they use link-layer addresses to forward frames through the network of switches.

Link-Layer Addressing and Address Resolution Protocol (ARP)

MAC Addresses

Link-layer addresses, also known as MAC addresses, are associated with network adapters rather than hosts and routers themselves. Each host or router can have multiple link-layer addresses. However, link-layer switches do not have link-layer addresses associated with their interfaces. MAC addresses are typically 6 bytes long and expressed in hexadecimal notation. They are unique, and their allocation is managed by the IEEE. MAC addresses have a flat structure and do not change when the adapter moves.

When sending a frame, the source adapter includes the destination adapter's MAC address in the frame. Upon receiving a frame, an adapter checks if the destination MAC address matches its own and processes the frame accordingly. However, there are cases when a sending adapter wants all other adapters on the LAN to receive the frame. In such cases, the sending adapter inserts a special MAC broadcast address into the destination address field of the frame. For LANs that use 6-byte addresses, such as Ethernet and 802.11, the broadcast address is represented by a string of 48 consecutive 1s (FF-FF-FF-FF-FF-FF in hexadecimal notation).

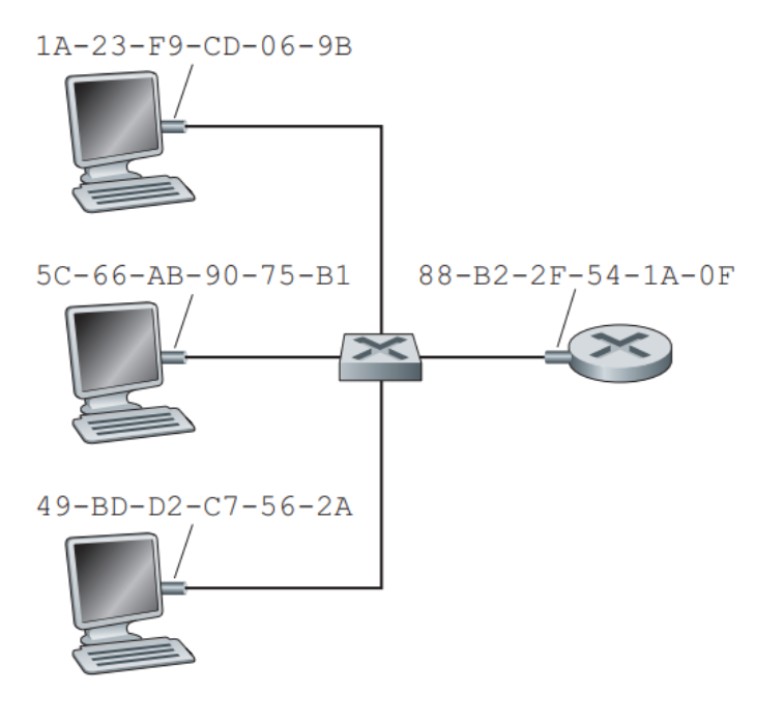

The figure above shows that each interface connected to a LAN has a unique MAC address.
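The address format and the receive-side check can be sketched as follows. This is a minimal illustration: the helper names are hypothetical, and the dash-separated notation follows the one used in these notes.

```python
BROADCAST = bytes([0xFF] * 6)  # 48 consecutive 1s


def parse_mac(text):
    """Convert 'FF-FF-FF-FF-FF-FF' style notation into 6 raw bytes."""
    parts = text.split("-")
    if len(parts) != 6:
        raise ValueError(f"expected 6 bytes, got {len(parts)}: {text!r}")
    return bytes(int(part, 16) for part in parts)


def format_mac(raw):
    """Convert 6 raw bytes back into dash-separated hexadecimal."""
    return "-".join(f"{byte:02X}" for byte in raw)


def adapter_accepts(own_mac, dest_mac):
    """An adapter processes frames addressed to itself or to the broadcast address."""
    return dest_mac == own_mac or dest_mac == BROADCAST


own = parse_mac("62-FE-F7-11-89-A3")
print(adapter_accepts(own, parse_mac("FF-FF-FF-FF-FF-FF")))
print(adapter_accepts(own, parse_mac("7C-BA-B2-B4-91-10")))
```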

Address Resolution Protocol (ARP)

The Address Resolution Protocol (ARP) is responsible for translating network-layer addresses (such as IP addresses) to link-layer addresses (MAC addresses). ARP is used within a subnet to resolve the MAC address of a destination host or router given its IP address. Hosts and routers maintain an ARP table that maps IP addresses to MAC addresses. When a sender wants to send a datagram to a destination without an entry in its ARP table, it sends an ARP query packet as a broadcast frame to all hosts and routers on the subnet. The recipient with a matching IP address responds with an ARP packet containing the desired MAC address. The sender updates its ARP table and sends the datagram encapsulated in a link-layer frame with the destination MAC address obtained from the response. ARP is considered a protocol that straddles the boundary between the link and network layers, as it contains both link-layer and network-layer addresses.
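The query-and-reply exchange described above can be sketched as a toy model. The `Subnet` and `Host` classes and all addresses are hypothetical; a real ARP implementation exchanges the packets defined in RFC 826 and also ages out table entries, which this sketch leaves out.

```python
class Subnet:
    """Toy broadcast domain that knows which MAC owns which IP address."""

    def __init__(self):
        self.hosts = {}

    def attach(self, ip, mac):
        self.hosts[ip] = mac

    def broadcast_arp_query(self, target_ip):
        # Every attached node sees the query; only the owner of target_ip
        # replies. None models the case where nobody answers.
        return self.hosts.get(target_ip)


class Host:
    def __init__(self, ip, mac, subnet):
        self.ip, self.mac, self.subnet = ip, mac, subnet
        self.arp_table = {}  # IP address -> MAC address
        subnet.attach(ip, mac)

    def resolve(self, target_ip):
        """Return the MAC for target_ip, querying the subnet on a table miss."""
        if target_ip not in self.arp_table:
            mac = self.subnet.broadcast_arp_query(target_ip)
            if mac is None:
                return None
            self.arp_table[target_ip] = mac  # cache the reply
        return self.arp_table[target_ip]


subnet = Subnet()
sender = Host("192.0.2.10", "02-00-00-00-00-0A", subnet)
target = Host("192.0.2.20", "02-00-00-00-00-0B", subnet)
print(sender.resolve("192.0.2.20"))  # first call broadcasts, later calls hit the table
```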

Sending a Datagram off the Subnet

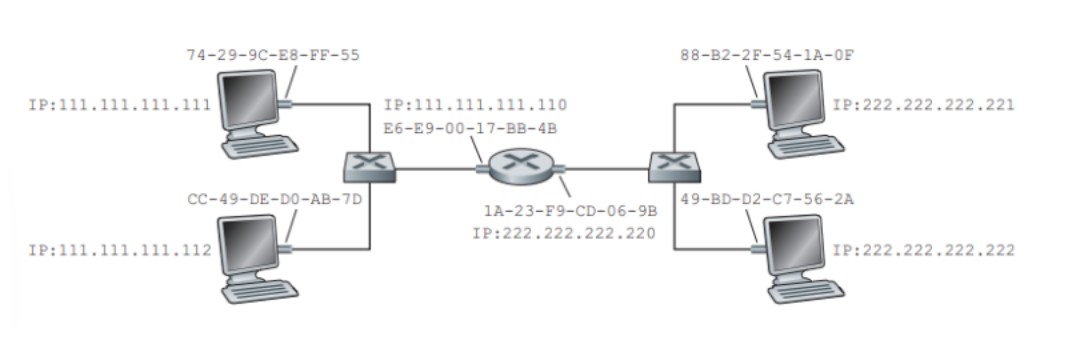

When a host on one subnet wants to send a network-layer datagram to a host on another subnet, ARP is still used. In the network configuration pictured above, which shows two subnets interconnected by a router, each host has one IP address and one adapter, while the router has multiple interfaces, each with its own IP address, ARP module, and adapter. To send a datagram from a host on Subnet 1 to a host on Subnet 2, the sending host must provide its adapter with the appropriate destination MAC address. Contrary to intuition, the destination MAC address should be that of the router interface on Subnet 1, not the MAC address of the destination host: the destination host's adapter is not on Subnet 1, so no adapter there would accept a frame addressed to it. The sending host obtains the MAC address of the router interface through ARP and sends the datagram encapsulated in a frame to the router. The router then consults its forwarding table to determine the correct interface for forwarding the datagram to the destination subnet, and it obtains the destination host's MAC address for the final leg of the journey through ARP on Subnet 2. ARP for Ethernet is defined in RFC 826, and further details can be found in RFC 1180.
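The sender's decision about whose MAC address to look up can be sketched with Python's standard `ipaddress` module. The function name and the example addresses are hypothetical and only illustrate the rule: resolve the destination itself if it is on the local subnet, otherwise resolve the router interface.

```python
import ipaddress


def next_hop_ip(src_interface, dest_ip, gateway_ip):
    """Return the IP address whose MAC the outgoing frame should be addressed to.

    src_interface is the sender's address with prefix length, e.g. '192.0.2.10/24'.
    """
    local_network = ipaddress.ip_interface(src_interface).network
    if ipaddress.ip_address(dest_ip) in local_network:
        return dest_ip    # same subnet: ARP for the destination host directly
    return gateway_ip     # different subnet: ARP for the router interface


print(next_hop_ip("192.0.2.10/24", "192.0.2.20", "192.0.2.1"))
print(next_hop_ip("192.0.2.10/24", "198.51.100.7", "192.0.2.1"))
```

In both cases the destination IP address in the datagram stays the final destination; only the destination MAC address of the frame changes.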

Ethernet

Ethernet has become the dominant wired LAN technology for several reasons. It was the first widely adopted high-speed LAN, and network administrators were reluctant to switch to other technologies. Alternatives like token ring, FDDI, and ATM were more complex and expensive. Ethernet consistently improved its data rates to compete with other technologies and introduced switched Ethernet for even higher speeds. Additionally, Ethernet hardware became inexpensive and widely available. The original Ethernet LAN used a bus topology but later shifted to a hub-based star topology, where all nodes are connected to a hub. In this configuration, the hub rebroadcasts the bits it receives to all other interfaces, so frames sent by two nodes at the same time collide. In the early 2000s, Ethernet evolved further when the hub at the center of the star was replaced by a switch, which eliminates collisions and acts as a store-and-forward packet switch operating at layer 2.

Ethernet Frame Structure

The Ethernet frame is the unit in which an adapter sends data over an Ethernet LAN. When an IP datagram is sent over an Ethernet LAN, the frame consists of a preamble, destination address, source address, type, data, and CRC field. The data field carries the IP datagram, up to a maximum size of 1,500 bytes. The destination address field holds the MAC address of the receiving adapter, while the source address field contains the MAC address of the transmitting adapter. The type field enables Ethernet to support various network-layer protocols. The CRC field helps detect bit errors in the frame, and the preamble field synchronizes clocks between sender and receiver. Ethernet offers a connectionless and unreliable service to the network layer, similar to the service provided by IP and UDP.
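The field layout can be illustrated with a small sketch that packs and parses a frame using Python's `struct` and `zlib` modules. Several simplifications are assumptions of the sketch rather than statements about real hardware: the preamble and minimum-length padding are omitted, the byte order of the CRC trailer is an arbitrary choice, and the function names are hypothetical. The type value 0x0800 denotes IPv4.

```python
import struct
import zlib

HEADER_FORMAT = "!6s6sH"  # destination MAC, source MAC, 16-bit type field
HEADER_LENGTH = struct.calcsize(HEADER_FORMAT)  # 14 bytes


def build_frame(dest_mac, src_mac, ethertype, payload):
    """Pack header and payload, then append a CRC-32 over both."""
    header = struct.pack(HEADER_FORMAT, dest_mac, src_mac, ethertype)
    crc = zlib.crc32(header + payload)
    return header + payload + struct.pack("<I", crc)


def parse_frame(frame):
    """Split a frame into its fields and report whether the CRC check passes."""
    dest_mac, src_mac, ethertype = struct.unpack(HEADER_FORMAT, frame[:HEADER_LENGTH])
    payload = frame[HEADER_LENGTH:-4]
    (received_crc,) = struct.unpack("<I", frame[-4:])
    crc_ok = zlib.crc32(frame[:-4]) == received_crc
    return dest_mac, src_mac, ethertype, payload, crc_ok


frame = build_frame(bytes.fromhex("ffffffffffff"), bytes.fromhex("020000000001"),
                    0x0800, b"example datagram")
print(parse_frame(frame)[4])          # CRC check on the intact frame

damaged = bytearray(frame)
damaged[HEADER_LENGTH] ^= 0x01        # flip one bit in the payload
print(parse_frame(bytes(damaged))[4]) # CRC check on the damaged frame
```

A failed CRC check only tells the receiver to drop the frame; consistent with the unreliable service described above, nothing in this sketch requests a retransmission.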

Ethernet Technologies

Ethernet comes in many versions and standards, with acronyms like 10BASE-T, 10BASE-2, 100BASE-T, 1000BASE-LX, 10GBASE-T, and 40GBASE-T. The first part of each acronym gives the speed, "BASE" indicates baseband Ethernet, and the final part refers to the physical media. Ethernet has evolved from bus-topology designs to point-to-point connections using copper wires or fiber cables. At the high end, 40 Gigabit Ethernet offers a raw data rate of 40,000 Mbps while remaining compatible with older Ethernet standards. It uses the standard frame format, supports both point-to-point links and shared broadcast channels, and allows simultaneous transmission in both directions (full duplex) on point-to-point channels. Although collisions are rare in modern Ethernet LANs with switches, the Ethernet MAC protocol is still included. The Ethernet frame format has remained consistent over the years, serving as the core of the Ethernet standard.

Link-Layer Switches

A switch receives incoming link-layer frames and forwards them to outgoing links. It operates transparently to the hosts and routers in the network, meaning they address frames to other hosts/routers without knowing that the switch is involved. Sometimes, the rate at which frames arrive at a switch's output interface can exceed its capacity. To handle this, switch output interfaces have buffers, similar to routers. Now, let's delve into how switches work in more detail.

Forwarding and Filtering

Filtering and forwarding in a switch are facilitated by a switch table, which contains entries for hosts and routers on a LAN. Each entry in the table consists of three components: the MAC address, the switch interface leading to that MAC address, and the timestamp indicating when the entry was added.

Switches forward frames based on MAC addresses, unlike routers which use IP addresses. When a frame arrives with a destination MAC address, the switch checks the switch table. There are three possible scenarios:

- No entry for the destination address: The switch broadcasts the frame to all interfaces except the incoming one.

- Entry for the destination address with the same interface the frame arrived on: The frame is discarded (filtered), since the destination is on the LAN segment the frame came from.

- Entry for the destination address with a different interface: The frame is forwarded to the corresponding interface's output buffer.

To illustrate the process, consider the uppermost switch in the picture of the institutional network shown earlier, together with the switch table shown below that picture. Suppose a frame with the destination address 62-FE-F7-11-89-A3 arrives at the switch through interface 1 (connected to Electrical Engineering). When the switch checks its table, it finds that the destination is on the LAN segment connected to interface 1. Since the frame has already been broadcast on that segment, the switch performs filtering by discarding the frame.

Now, imagine the same frame arrives from interface 2. The switch examines its table and discovers that the destination is associated with interface 1. In this case, the frame needs to be forwarded to the LAN segment connected to interface 1. The switch carries out the forwarding function by placing the frame in the output buffer preceding interface 1.

This example demonstrates that with a complete and accurate switch table, frames can be forwarded directly to their destinations without the need for broadcasting, which makes switches more intelligent than hubs. It leaves open how the switch table gets populated in the first place; that question is taken up in the Self-learning section.
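The three cases can be written as one small function. This is a sketch: the function name, the use of a plain dictionary as the switch table, and the set of four interfaces are assumptions made for illustration.

```python
def handle_frame(switch_table, dest_mac, in_interface, all_interfaces):
    """Return the list of interfaces a frame is sent out on.

    switch_table maps a MAC address to the interface that leads to it.
    """
    out_interface = switch_table.get(dest_mac)
    if out_interface is None:
        # No entry: send on every interface except the one the frame arrived on.
        return [i for i in all_interfaces if i != in_interface]
    if out_interface == in_interface:
        # Destination is on the segment the frame came from: filter (discard).
        return []
    # Destination is reachable through a different interface: forward.
    return [out_interface]


table = {"62-FE-F7-11-89-A3": 1}
interfaces = [1, 2, 3, 4]
print(handle_frame(table, "62-FE-F7-11-89-A3", 1, interfaces))  # filtered
print(handle_frame(table, "62-FE-F7-11-89-A3", 2, interfaces))  # forwarded
print(handle_frame(table, "7C-BA-B2-B4-91-10", 2, interfaces))  # no entry
```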

Self-learning

A switch can automatically learn and build its table without any manual configuration. Here's how it works:

- At the beginning, the switch table is empty.

- When a frame arrives on an interface, the switch saves three important details in its table: the MAC address of the frame's source, the interface it came from, and the current time. This helps the switch associate the sender with their LAN segment. As more hosts send frames, the table gets populated.

- If no frames are received with a specific MAC address as the source within a certain time period (the aging time), the switch removes that address from the table. This ensures that if a PC is replaced, its old MAC address will eventually be forgotten by the switch.
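The learning and aging steps above can be sketched as a small class. The class name, the method names, and the choice to pass the current time in explicitly (rather than reading a clock) are assumptions made to keep the example deterministic.

```python
class LearningSwitch:
    """Toy self-learning switch table with aging."""

    def __init__(self, aging_time):
        self.aging_time = aging_time
        self.table = {}  # source MAC -> (interface, time last seen)

    def learn(self, src_mac, in_interface, now):
        """Record, or refresh, where a frame's source address was seen."""
        self.table[src_mac] = (in_interface, now)

    def expire(self, now):
        """Drop entries whose source has been silent longer than the aging time."""
        stale = [mac for mac, (_, last_seen) in self.table.items()
                 if now - last_seen > self.aging_time]
        for mac in stale:
            del self.table[mac]


switch = LearningSwitch(aging_time=60)
switch.learn("01-12-23-34-45-56", in_interface=2, now=0)
switch.learn("62-FE-F7-11-89-A3", in_interface=1, now=50)
switch.expire(now=70)
print(switch.table)  # only the entry seen within the last 60 time units remains
```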

Switches vs Routers

Switches and routers are both types of packet switches, but they differ in how they forward packets. Routers use network-layer addresses (IP addresses) to forward packets, while switches use MAC addresses. Switches are considered layer-2 packet switches, while routers are layer-3 packet switches.

Switches have the advantage of being plug-and-play devices, which makes them easy to set up. They can also achieve high filtering and forwarding rates since they only process up to layer 2 of the OSI model. However, switches are limited to a spanning tree topology to prevent broadcast frame cycling. Large switched networks require large ARP tables, leading to increased ARP traffic and processing. Switches are also vulnerable to broadcast storms, where excessive broadcast frames can cause network-wide disruptions.

On the other hand, routers offer hierarchical network addressing, allowing packets to find the best path between source and destination, even in networks with redundant paths. Routers do not have the spanning tree restriction, enabling the creation of complex network topologies. They provide firewall protection against layer-2 broadcast storms. However, routers require IP addresses to be configured, making them less plug-and-play compared to switches. Routers also have higher per-packet processing time as they handle layer-3 fields.

In deciding whether to use switches or routers in a network, small networks with a few hundred hosts can benefit from switches, as they provide localized traffic and increased throughput without requiring IP address configuration. Larger networks with thousands of hosts typically incorporate routers in addition to switches. Routers ensure robust traffic isolation, control broadcast storms, and offer more intelligent routing among hosts.

Link Virtualization

In this chapter, the concept of link virtualization and the evolution of our understanding of links are explored. Initially, links were considered as physical wires connecting two hosts. However, it was discovered that links can be shared among multiple hosts using various media such as radio spectra. The examination of Ethernet LANs revealed that the interconnecting media could be a complex switched infrastructure. Despite these advancements, hosts still perceive the interconnecting medium as a link-layer channel.

Moving on, dial-up modem connections were discussed, where the link between two hosts is the telephone network—a separate global network with its switches, links, and protocols. From the perspective of the Internet, the telephone network is virtualized as a link-layer technology connecting Internet hosts. This virtualization is similar to how overlay networks view the Internet as a means of providing connectivity between overlay nodes.

Next, Multiprotocol Label Switching (MPLS) networks were explored. MPLS is a packet-switched, virtual-circuit network that enhances the forwarding speed of IP routers by using fixed-length labels: packets are forwarded based on their label rather than their destination IP address. MPLS operates alongside IP, leveraging IP addressing and routing. MPLS-capable routers, also known as label-switched routers, forward MPLS frames by looking up the MPLS label in their forwarding table. MPLS networks interconnect IP devices, similar to switched LANs or ATM networks.

The MPLS header format and the requirement for MPLS-capable routers to transmit MPLS frames were discussed. Routers exchange information about labels and destinations to determine how to forward MPLS frames. MPLS signaling and path computation algorithms are utilized to establish and manage MPLS paths among routers. MPLS offers advantages in traffic management and enables traffic engineering, allowing network operators to control the flow of traffic along specific paths.
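Label-based forwarding can be sketched as an exact-match table lookup. The table contents and the function name below are hypothetical; the sketch assumes each entry maps an incoming label to the label to write into the outgoing frame and the interface to send it on.

```python
def forward_mpls(label_table, in_label):
    """Exact-match lookup on the incoming label.

    Returns (out_label, out_interface). No IP address is examined.
    """
    return label_table[in_label]


# Hypothetical forwarding table of one label-switched router.
label_table = {
    10: (6, 1),   # frames arriving with label 10 leave with label 6 on interface 1
    12: (9, 0),   # frames arriving with label 12 leave with label 9 on interface 0
}

out_label, out_interface = forward_mpls(label_table, 10)
print(out_label, out_interface)
```

Because the lookup key is a short fixed-length label, the router performs a single exact match instead of a longest-prefix match on a destination IP address.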

Data Center Networking

Data center networking is an important part of how internet companies like Google, Microsoft, Amazon, and Alibaba operate. These companies have huge data centers that house thousands to hundreds of thousands of computer hosts. These data centers not only connect to the internet, but they also have their own internal computer networks called data center networks.

Data centers serve three main purposes. First, they deliver content like web pages, search results, emails, and streaming videos to users. Second, they act as powerful computing systems for handling large-scale data processing tasks, such as calculating search engine indexes. Third, they offer cloud computing services to other companies. Nowadays, many companies choose to use cloud providers like Amazon Web Services, Microsoft Azure, or Alibaba Cloud to take care of all their IT needs.

Retrospective: A Day in the Life of a Web Page Request

Imagine a student named Bob who wants to download a web page, like the home page of Google. It may seem like a simple task, but there are actually many protocols involved in making it happen.

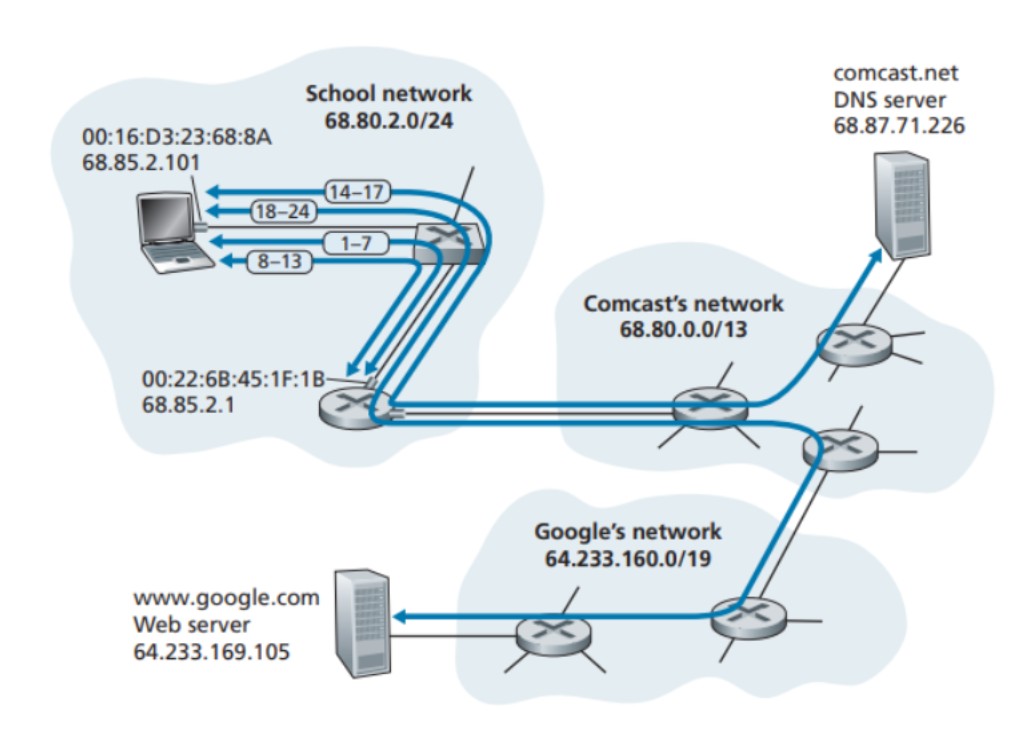

The picture above shows Bob connecting his laptop to the school's Ethernet switch and downloading the web page. Behind the scenes, numerous protocols work together to ensure Bob can access the web page successfully.

DHCP, UDP, IP, Ethernet

To do anything on the network, like downloading a web page, Bob's laptop needs an IP address. Here's an explanation of what happens:

- Bob's laptop sends a request using the DHCP protocol to obtain an IP address from the local DHCP server. This request is sent as a message within a UDP segment with specific port numbers.

- The request message is placed within an Ethernet frame and broadcast to all devices connected to the switch.

- The router receives the broadcast request and extracts the message from the Ethernet frame. It processes the message and prepares a response.

- The DHCP server within the router assigns an IP address to Bob's laptop and creates a response message (ACK) containing the assigned IP address, DNS server IP address, default gateway IP address, and subnet block information.

- The response message is put inside an Ethernet frame and sent back to Bob's laptop.

- The switch directs the response frame specifically to Bob's laptop based on its MAC address.

- Bob's laptop receives the response, extracts the information from the frame, and records its IP address, DNS server IP address, and default gateway IP address. It initializes its networking components and is ready to use the network.

These steps give a simplified account of how Bob's laptop obtains an IP address through DHCP and sets up the network configuration it needs for tasks like downloading web pages.
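The nesting of the DHCP request inside UDP, IP, and Ethernet can be sketched with plain data classes. The class names, the function name, and the laptop's MAC address are hypothetical. The port numbers (68 for the client, 67 for the server) and the addresses 0.0.0.0 and 255.255.255.255 are the standard values a DHCP client uses before it has an address of its own.

```python
from dataclasses import dataclass


@dataclass
class UdpSegment:
    src_port: int
    dst_port: int
    payload: str


@dataclass
class IpDatagram:
    src_ip: str
    dst_ip: str
    payload: UdpSegment


@dataclass
class EthernetFrame:
    src_mac: str
    dst_mac: str
    payload: IpDatagram


def encapsulate_dhcp_request(client_mac):
    """Wrap a DHCP request in UDP, then IP, then a broadcast Ethernet frame."""
    segment = UdpSegment(src_port=68, dst_port=67, payload="DHCP request")
    datagram = IpDatagram(src_ip="0.0.0.0", dst_ip="255.255.255.255", payload=segment)
    return EthernetFrame(src_mac=client_mac, dst_mac="FF:FF:FF:FF:FF:FF", payload=datagram)


frame = encapsulate_dhcp_request("02:00:00:00:00:01")  # hypothetical laptop MAC
print(frame.dst_mac, frame.payload.dst_ip, frame.payload.payload.dst_port)
```

The receiver unwraps the layers in the opposite order: frame, then datagram, then segment, then the DHCP message itself.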

DNS and ARP

- Bob's laptop creates a DNS query message containing the hostname "www.google.com" and puts it in a UDP segment. The UDP segment is then placed in an IP datagram with the DNS server's IP address (68.87.71.226) as the destination and Bob's laptop's IP address (68.85.2.101) as the source.

- Bob's laptop encapsulates the DNS query datagram in an Ethernet frame and sends it to the gateway router. However, Bob's laptop doesn't know the MAC address of the gateway router (68.85.2.1) yet, so it needs to use the ARP protocol to find it.

- Bob's laptop sends an ARP query message within an Ethernet frame with a broadcast destination address. The frame is delivered to all connected devices, including the gateway router.

- The gateway router receives the ARP query and recognizes that its own IP address (68.85.2.1) matches the target IP address. It responds with an ARP reply message, stating that its MAC address (00:22:6B:45:1F:1B) corresponds to the IP address 68.85.2.1. The reply is placed in an Ethernet frame with Bob's laptop as the destination and is sent back through the switch to Bob's laptop.

- Bob's laptop receives the ARP reply frame and extracts the MAC address of the gateway router (00:22:6B:45:1F:1B) from the message.

- Now, Bob's laptop can address the Ethernet frame containing the DNS query to the MAC address of the gateway router. The IP datagram within the frame has the DNS server's IP address (68.87.71.226) as the destination, while the frame itself has the gateway router's MAC address (00:22:6B:45:1F:1B) as the destination. Bob's laptop sends this frame to the switch, which delivers it to the gateway router.

Intra-Domain Routing to the DNS Server

- The gateway router receives the DNS query and sends it to the next router in the network.

- The next router receives the query, determines where to send it based on its routing table, and forwards it accordingly.

- The DNS server receives the query, looks up the requested website in its database, and sends back a response with the corresponding IP address.

- Bob's laptop receives the response, extracts the IP address of the website, and is now ready to connect to the desired server.

Web Client-Server Interaction: TCP and HTTP

- Bob's laptop wants to connect to www.google.com, so it creates a TCP socket. To open the connection, it sends a TCP SYN segment to www.google.com. The segment is placed inside an IP datagram whose destination is the IP address of www.google.com, and the datagram is placed inside a frame addressed to the gateway router's MAC address and sent to the gateway router.

- The routers in the school network, Comcast's network, and Google's network help send the message from Bob's laptop to www.google.com using their routing tables. These tables tell the routers how to forward the message towards www.google.com. The BGP protocol helps determine the routing between Comcast and Google networks.

- The TCP SYN message from Bob's laptop reaches www.google.com. The message is received, and a connection socket is created for the TCP connection between Google's server and Bob's laptop. A response called TCP SYNACK is generated and sent back to Bob's laptop.

- The TCP SYNACK message travels through the networks and reaches Bob's laptop. It is received and assigned to the TCP socket created earlier, establishing the connection.

- Bob's browser prepares an HTTP GET message with the URL it wants to fetch. The message is written into the socket and sent to www.google.com.

- The HTTP server at www.google.com receives the HTTP GET message, processes it, and creates an HTTP response message with the requested web page content. The response is sent back to Bob's laptop.

- The HTTP response message travels back through the networks and reaches Bob's laptop. Bob's web browser reads the response, extracts the webpage content, and finally displays the web page.
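The HTTP GET message that the browser writes into the socket can be sketched as follows. The function name and the choice of headers are assumptions; a real browser sends many more header lines. The sketch only builds the bytes and does not open a connection.

```python
def build_http_get(host, path="/"):
    """Build a minimal HTTP/1.1 GET request as bytes."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",
        "",   # blank line that ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


request = build_http_get("www.google.com")
print(request.decode("ascii"))
```

Once the TCP connection is established, bytes like these are what travel inside TCP segments, IP datagrams, and Ethernet frames on their way to the server.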